24 November 2024

Exploring the Capabilities of LLMs: Benchmarking Insights

A comparative analysis of LLMs across different tasks, showing that small models can handle nearly any task for staff and lecturers as well as large models can, and at lower cost.

Understanding the strengths and weaknesses of large language models (LLMs) for different tasks is crucial. Through extensive benchmarking, our study aimed to identify which specific tasks these models excel at and where they fall short. This article presents our findings from an in-depth comparison of several models, using a cross-grading process to evaluate their performance. Here’s how we did it and what we found.

Our Testing Approach

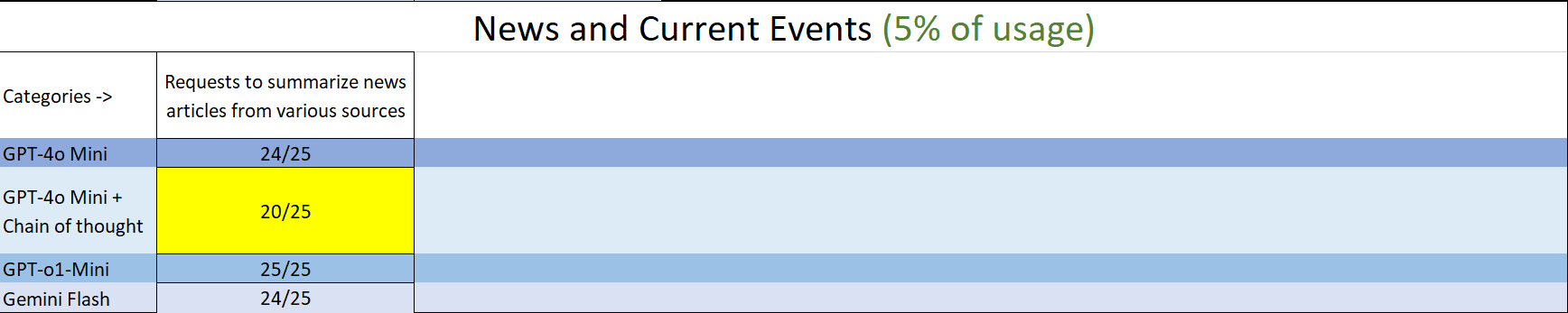

Our benchmarking covered various task categories, including Proposal Grant Writing, Education and Course Development, Technology and AI, Business Management, News and Current Events, General Knowledge and Miscellaneous, and Personal Tasks. To assess the performance of the models objectively, we designed a cross-grading system in which each grading test was conducted three times for every model, so that each reported grade is an average over three runs. We used GPT-4o to act as the grader.
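The averaging step can be sketched as follows. This is a minimal illustration, assuming each grading run yields a single numeric score; the function name `average_grades`, the model labels, and the sample scores are hypothetical placeholders, not our actual results.

```python
from statistics import mean


def average_grades(runs):
    """Average repeated grader scores per (model, category) pair.

    `runs` maps (model, category) -> list of scores, one per grading run.
    """
    return {key: round(mean(scores), 2) for key, scores in runs.items()}


# Placeholder scores for illustration only (not measured results).
example_runs = {
    ("model_a", "Education"): [8.0, 7.5, 8.5],
    ("model_b", "Education"): [7.0, 7.5, 8.0],
}

averages = average_grades(example_runs)
for (model, category), score in averages.items():
    print(f"{model} / {category}: {score}")
```

Averaging over three runs smooths out some of the run-to-run variation in the grader's scores, although three samples is still a small number.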

For each task, we ran the request prompts through two different LLMs: "GPT-4o Mini" and "Gemini 1.5 Flash." The outputs were then graded by GPT-4o using detailed rubrics that covered various aspects of the prompt's requirements. GPT-4o provided comprehensive grading summaries, offering us a clear picture of the strengths and weaknesses of each model in different contexts.

Task Categories and Cross-Grading Process

Our testing involved both specific and general categories. For the Proposal Grant Writing category, for example, we ran the prompts in GPT-4o Mini and Gemini 1.5 Flash and then used GPT-4o to cross-grade their outputs. We provided GPT-4o with project-specific information, the LLM outputs, and rubrics that focused on elements such as defining objectives, identifying risks, describing project impact, and outlining dissemination plans.

For other categories, including Education and Technology, the process was similar: we provided GPT-4o with both the request and the outputs, along with a grading rubric tailored to each category. This meticulous process helped ensure an objective evaluation of each LLM’s capabilities.
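As a rough sketch of how such a grading request could be assembled, the snippet below combines the original request, the model outputs, and a rubric into one prompt string. The function name `build_grading_prompt`, the prompt wording, and the sample request are assumptions made for illustration; they are not the exact prompts used in the study. The rubric items are the grant-writing elements mentioned above.

```python
def build_grading_prompt(request, outputs, rubric):
    """Assemble one grading prompt from the request, model outputs, and rubric.

    `outputs` maps a model label to its response text.
    `rubric` is a list of criteria the grader should score against.
    """
    rubric_text = "\n".join(f"- {criterion}" for criterion in rubric)
    outputs_text = "\n\n".join(
        f"Response from {label}:\n{text}" for label, text in outputs.items()
    )
    return (
        "Grade each response against the rubric below.\n\n"
        f"Original request:\n{request}\n\n"
        f"Rubric:\n{rubric_text}\n\n"
        f"{outputs_text}"
    )


# Hypothetical request and placeholder outputs, for illustration only.
prompt = build_grading_prompt(
    request="Draft the objectives section of a grant proposal.",
    outputs={"Model A": "<output A>", "Model B": "<output B>"},
    rubric=[
        "Defines objectives",
        "Identifies risks",
        "Describes project impact",
        "Outlines dissemination plans",
    ],
)
print(prompt)
```

Labeling the responses neutrally (for example "Model A" and "Model B") is one way to keep the grader from being influenced by model names, though whether that matters in practice would need to be tested.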

Key Findings

The results revealed some interesting insights into the comparative strengths of the models. Broadly, we found that GPT-4o Mini performed slightly better than Gemini 1.5 Flash across most categories, which aligns with the rankings provided by the Hugging Face Arena Full Leaderboard (Elo ratings: GPT-4o Mini - 1274, Gemini 1.5 Flash - 1268). However, these differences were not always consistent across other metrics and platforms.
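To put the six-point rating gap in perspective, the sketch below applies the standard Elo expected-score formula to the two ratings quoted above. It assumes the leaderboard ratings can be read on the usual 400-point logistic Elo scale; the function name `elo_expected_score` is our own.

```python
def elo_expected_score(rating_a, rating_b):
    """Expected score of A against B under the standard Elo formula."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Ratings quoted above: GPT-4o Mini 1274, Gemini 1.5 Flash 1268.
p_mini = elo_expected_score(1274, 1268)
print(f"{p_mini:.3f}")  # about 0.509
```

Under that assumption, GPT-4o Mini would be preferred in roughly 51% of head-to-head comparisons, which is consistent with the "slightly better" result we observed.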

On ArtificialAnalysis.com, for instance, the quality rankings differed slightly: GPT-4o Mini ranked 71, while Gemini 1.5 Flash ranked 61. These variations can be attributed to differences in how each testing platform conducts its evaluations, including the number of test runs and the prompt types.

In terms of cost, Gemini 1.5 Flash is more cost-effective, costing half as much per input and output token as GPT-4o Mini. However, when we introduced GPT-4o for grading, the cost escalated significantly. Gemini 1.5 Flash also outperformed GPT-4o Mini in terms of speed, generating 208 tokens per second compared to GPT-4o Mini's 134 tokens per second.
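The speed difference is easier to judge as wall-clock time. The short calculation below uses the two throughput figures quoted above; the 1,000-token response length is an assumed example, and the estimate ignores network latency and time to first token.

```python
def generation_seconds(num_tokens, tokens_per_second):
    """Time to generate `num_tokens` at a constant output speed."""
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 1000  # assumed response length, for illustration

flash_s = generation_seconds(RESPONSE_TOKENS, 208)  # Gemini 1.5 Flash
mini_s = generation_seconds(RESPONSE_TOKENS, 134)   # GPT-4o Mini
speedup = 208 / 134

print(f"Gemini 1.5 Flash: {flash_s:.2f} s")  # 4.81 s
print(f"GPT-4o Mini:      {mini_s:.2f} s")   # 7.46 s
print(f"Speed ratio:      {speedup:.2f}x")   # 1.55x
```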

Detailed Grading Results

Below are some notable findings from our grading process, focusing on specific categories:

Note: We also included an extra test dubbed "Chain of Thought" or "COT". In this test, every prompt included a series of instructions on how the LLM should perform its task. The aim was to determine whether adding the same "functional" instructions to each prompt would produce higher-quality output. These are the instructions:

You are an AI assistant designed to think through problems step-by-step using Chain-of-Thought (COT) prompting. Before providing any answer, you must:

- Understand the Problem: Carefully read and understand the user's question or request.

- Break Down the Reasoning Process: Outline the steps required to solve the problem or respond to the request logically and sequentially. Think aloud and describe each step in detail.

- Explain Each Step: Provide reasoning or calculations for each step, explaining how you arrive at each part of your answer.

- Arrive at the Final Answer: Only after completing all steps, provide the final answer or solution.

- Review the Thought Process: Double-check the reasoning for errors or gaps before finalizing your response.

- Always aim to make your thought process transparent and logical, helping users understand how you reached your conclusion.
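A minimal sketch of how the COT variant of a prompt could be produced alongside the plain variant is shown below. Prepending the instructions to the task prompt is an assumption about placement (they could equally be sent as a system message); `COT_INSTRUCTIONS` is abbreviated to the opening sentence, and `build_prompt` and the sample task are hypothetical.

```python
# Abbreviated: only the opening sentence of the instructions listed above.
# The remaining bullet points would be appended to this string.
COT_INSTRUCTIONS = (
    "You are an AI assistant designed to think through problems step-by-step "
    "using Chain-of-Thought (COT) prompting."
)


def build_prompt(task_prompt, use_cot):
    """Return the task prompt, optionally prefixed with the COT instructions."""
    if not use_cot:
        return task_prompt
    return f"{COT_INSTRUCTIONS}\n\n{task_prompt}"


# Hypothetical task, used to produce both test variants.
task = "Outline a lesson plan on photosynthesis."
plain_prompt = build_prompt(task, use_cot=False)
cot_prompt = build_prompt(task, use_cot=True)
```

Keeping the task text identical across both variants means any difference in grades can be attributed to the added instructions rather than to the wording of the task.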

Conclusion

Our benchmarking results indicate that both GPT-4o Mini and Gemini 1.5 Flash have distinct strengths that make them suitable for different types of tasks. GPT-4o Mini's edge in structured tasks, particularly with chain-of-thought prompts, makes it well-suited for educational and technical content, whereas Gemini 1.5 Flash's speed and cost efficiency make it a more economical choice for fast, general responses. However, the choice of which model to use ultimately depends on the specific needs—whether it’s about cost, speed, or the complexity of the task at hand.

Remember to always check AI outputs thoroughly, as human oversight lowers AI risk. For more information, contact AILC.